Exploring the Intersection of Art and Machine Intelligence

February 22, 2016

Posted by Mike Tyka, Software Engineer

In June of last year, we published a story about a visualization technique that helped us understand how neural networks carry out difficult visual classification tasks. In addition to giving us a deeper understanding of how neural networks work, the technique also produced strange, wonderful and oddly compelling images.

Following that blog post, and especially after we released the source code, dubbed DeepDream, we witnessed tremendous interest not only from the machine learning community but also from the creative coding community. Artists including Amanda Peterson (aka Gucky), Memo Akten, Samim Winiger, Kyle McDonald, Gene Kogan and many others immediately started experimenting with the technique as a new way to create art.

“GCHQ”, 2015, Memo Akten, used with permission.

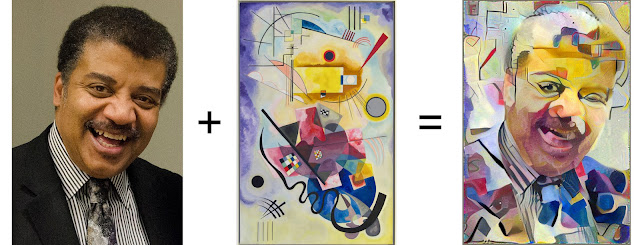

The style transfer algorithm crosses a photo with a painting style; for example, Neil deGrasse Tyson in the style of Kandinsky’s Jaune Rouge Bleu. Photo by Guillaume Piolle, used with permission.

“Saxophone dreams” - Mike Tyka.

These are exciting early days, and we want to continue to stimulate artistic interest in these emerging technologies. To that end, we are announcing a two-day DeepDream event in San Francisco at the Gray Area Foundation for the Arts, aimed at showcasing some of the latest explorations of the intersection of Machine Intelligence and Art, and spurring discussion of future directions:

- Friday Feb 26th: DeepDream: The Art of Neural Networks, an exhibit consisting of 29 neural-network-generated artworks, created by artists at Google and from around the world. The works will be auctioned, with all proceeds going to the Gray Area Foundation, which has been active in supporting the intersection between arts and technology for over 10 years.

- Saturday Feb 27th: Art and Machine Learning Symposium, an open one-day symposium on Machine Learning and Art, aiming to bring together the neural network and creative coding communities to exchange ideas, learn and discuss. Videos of all the talks will be posted online after the event.


Labels:

- Machine Intelligence